Neural Networks

Neural networks (NNs) are a more contemporary approach to modeling a time series. The multilayer perceptron (MLP) is a basic type of NN used for this purpose.

Examples From Previous Models

SLR

Simple linear regression (SLR) can be expressed as follows:

$$ Y_t = \beta_0 + \beta_1 X_{1} + \epsilon $$

MLR

Multiple linear regression (MLR) with three predictors can be expressed as follows:

$$ Y_t = \beta_0 + \beta_1 X_{1} + \beta_2 X_{2} + \beta_3 X_{3} + \epsilon $$

Time Series

The following equation expresses the MLP for an AR(3), where the inputs are the three most recent lagged values of the series:

$$ Y_t = \beta_0 + \beta_1 y_{t-1} + \beta_2 y_{t-2} + \beta_3 y_{t-3} + \epsilon $$
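Because the AR(3) equation is linear in the lagged values, it can be estimated like a regression on those lags. The following is a minimal base R sketch of that idea, not the course's own code: the simulated series, its coefficients, and the column names `y_lag1`, `y_lag2`, `y_lag3` are illustrative assumptions.

```r
set.seed(1)

# Simulate a stationary AR(3) series; the coefficients are illustrative only.
y <- arima.sim(model = list(ar = c(0.5, -0.2, 0.1)), n = 500)

# embed() puts y_t in column 1 and y_{t-1}, y_{t-2}, y_{t-3} in columns 2 to 4.
lagged <- as.data.frame(embed(as.numeric(y), 4))
names(lagged) <- c("y_t", "y_lag1", "y_lag2", "y_lag3")

# Least-squares fit of Y_t = b0 + b1*y_{t-1} + b2*y_{t-2} + b3*y_{t-3} + error.
ar3_fit <- lm(y_t ~ y_lag1 + y_lag2 + y_lag3, data = lagged)

# The intercept and three slopes play the role of beta_0, ..., beta_3.
coef(ar3_fit)
```

Viewed as a network, the three lag columns are the input nodes and the fitted coefficients are the weights feeding a single linear output node.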

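Non-Linear Models

It can be shown that a single hidden layer with enough nodes and a non-linear activation function can approximate any continuous function on a closed, bounded input range to arbitrary accuracy (the universal approximation theorem). An example of a non-linear NN is shown below.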
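One possible way to write such a model, using the same three lagged inputs as the AR(3) equation above, is sketched here. The symbols $H$ (number of hidden nodes), $g$ (a non-linear activation such as the logistic function or tanh), and the hidden-layer weights $\alpha_{ij}$ are notation assumed for illustration and do not come from the original material.

$$ Y_t = \beta_0 + \sum_{j=1}^{H} \beta_j \, g\left( \alpha_{0j} + \alpha_{1j} y_{t-1} + \alpha_{2j} y_{t-2} + \alpha_{3j} y_{t-3} \right) + \epsilon $$

If $g$ is replaced by the identity function, the right-hand side collapses to a linear combination of the three lags, which is the AR(3) form again.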

NN in R

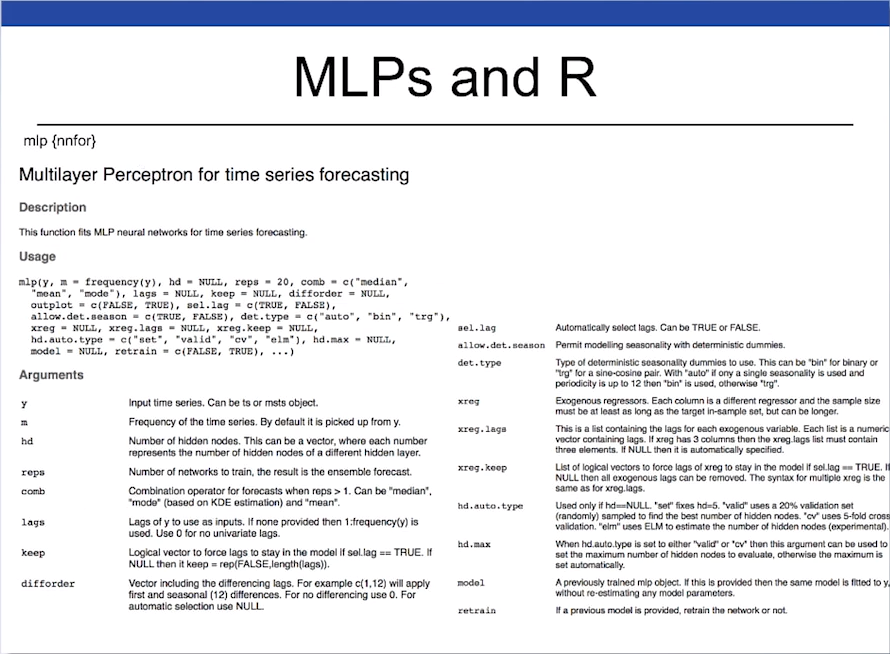

The R package nnfor can be used to construct neural networks for time series forecasting.

NNs have many parameters that can be tuned, such as the number of hidden layers, the activation functions, and dropout.
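As a rough illustration of how a few such settings are passed to nnfor, here is a minimal sketch that assumes the package is installed. The built-in `AirPassengers` series and every argument value are illustrative choices, and the arguments shown (`hd`, `reps`, `comb`, `lags`, `difforder`) are only a subset of what `mlp` accepts.

```r
# Assumes nnfor is installed: install.packages("nnfor")
library(nnfor)

y <- AirPassengers             # monthly series shipped with base R (stand-in data)

set.seed(42)                   # training starts from random initial weights
fit <- mlp(y,
           hd = c(5, 3),       # two hidden layers with 5 and 3 nodes
           reps = 10,          # train 10 networks ...
           comb = "median",    # ... and combine their forecasts with the median
           lags = 1:12,        # candidate autoregressive lags used as inputs
           difforder = NULL)   # NULL lets mlp select the differencing itself

print(fit)                     # summary of the fitted network

fc <- forecast(fit, h = 12)    # forecast the next 12 months
plot(fc)
```

Training several networks and combining them reduces the dependence of the forecast on any single random initialization, at the cost of longer run time.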

The following slide shows some details on the parameters that can be used in nnfor::mlp.